We have had a number of questions regarding the diagram comparison support in EMF Compare 2. This is something that was (somewhat) provided with EMF Compare 1.3, but that we have yet to port to the new version of the APIs.

Well, the work is ongoing and preliminary support for diagram comparison is available in the current integration builds. The port was done in the context of the modeling platform working group and is mainly targeting Papyrus, though ecoretools diagrams (and plain GMF diagrams) should also be supported.

We have not fully settled on how to represent deletions on a diagram, but the rest is beginning to take shape :

Do note that this is still very rough, and merging some of these differences (mainly "label changes") graphically is still problematic, but we expect this to be fine-tuned for Kepler's release :).

Stay tuned!

Friday, November 30, 2012

Wednesday, October 17, 2012

EMF Compare scalability - take two

Exactly one year ago, I published here the results of our work on measuring and enhancing the scalability of EMF Compare 1.3. In that post I detailed how we had greatly improved the comparison times, and how we had observed that EMF Compare was a glutton in terms of memory usage. At the time, we identified a number of areas for improvement... and they were part of what pushed us towards a new major version, EMF Compare 2.0.

Now that 2.0 is out of the oven and we are rid of the limitations of the 1.* stream, it was time to move on to the next step and make sure we could scale to millions! We continued the work started last year as part of the modeling platform working group on the support for logical resources in EMF Compare.

The main problem was that EMF Compare needed to load the whole logical model two times (or three, for comparisons involving a VCS) in order to compare it. For large models, this quickly required a huge amount of heap space. The idea was to determine beforehand which of the fragments really needed to be loaded : if a model is composed of 1000 fragments and only 3 of them have changed, it is a huge waste of time and memory to load the 997 others.

To this end, we tried with EMF Compare 1.3 to make use of Eclipse Team's "logical model" APIs. This was promising (as shown in last year's post about our performance at the time), but limited, since these APIs only behave well when the VCS providers use them properly. In EMF Compare 2, we decided to circumvent this limitation by using our own implementation of logical model support (see the wiki for more on that).

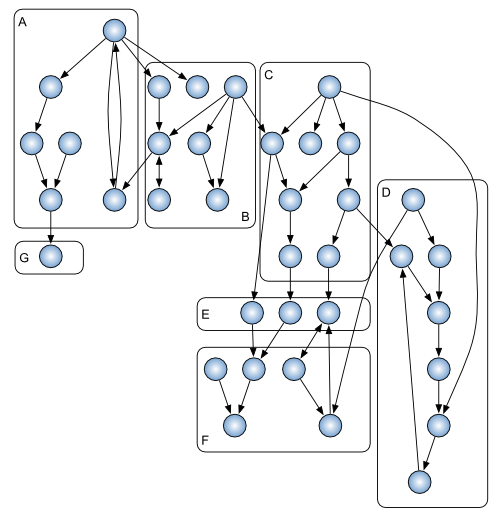

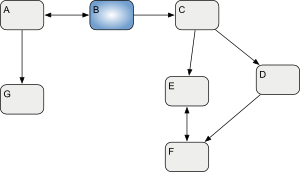

As a quick explanation, an EMF model can be seen as a set of elements referencing each other. This is what we call a "logical model" :

However, these elements are not always living in memory. When they are serialized on disk, they may be saved into a single physical file, or they can be split across multiple files, as long as the references between these files can be resolved :

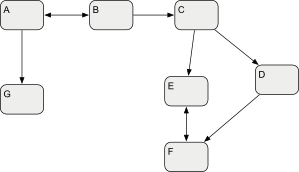

If we remain at the file level, this logical model can be seen as a set of 7 files :

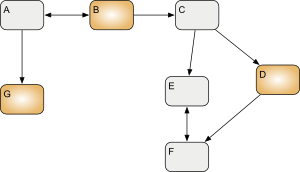
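To make the idea of "a logical model seen as a set of files" more concrete, here is a minimal sketch of how such a set could be computed. It is only an illustration, not the EMF Compare implementation: the file names and the `references` map are made up, and it assumes that following outgoing cross-file references from a starting file is enough to find every fragment.

```python
from collections import deque
from typing import Dict, List, Set


def logical_model(start: str, references: Dict[str, List[str]]) -> Set[str]:
    """Collect every file reachable from `start` by following cross-file references."""
    seen = {start}
    queue = deque([start])
    while queue:
        current = queue.popleft()
        for target in references.get(current, []):
            if target not in seen:  # guards against reference cycles
                seen.add(target)
                queue.append(target)
    return seen


# Hypothetical workspace: 7 files belong to the model, one does not.
references = {
    "root.uml": ["a.uml", "b.uml"],
    "a.uml": ["c.uml", "d.uml"],
    "b.uml": ["e.uml"],
    "c.uml": [],
    "d.uml": ["f.uml"],
    "e.uml": ["a.uml"],  # back reference, creates a cycle
    "f.uml": [],
    "unrelated.uml": [],
}

files = logical_model("root.uml", references)
print(sorted(files))
```

The traversal stops as soon as no unseen file is referenced, so files that are never referenced from the model (here `unrelated.uml`) are never touched.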

Now let's suppose that we are trying to compare a modified copy (left) of this model with a remote version (right). This requires a three-way comparison of left, right and their common ancestor, origin. That would mean loading all 21 files in memory in order to recompose the logical model. However, suppose that only some of these files really did change (blue-colored hereafter) :

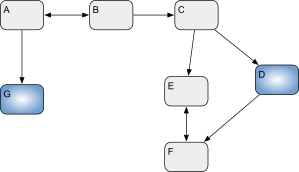

In such a case, what we need to load is actually a small part of the 21 total files. For each of the three sides of the comparison, we only need to load the files that can potentially show differences :

Which means that we only need to load 9 out of the 21 files, for about 57% less memory than what EMF Compare 1 needed (12 of the 21 files are skipped, if we consider all files to be of equal "weight"). Just imagine if the whole logical model was composed of a thousand fragments instead of 7, allowing us to load only 9 files out of the total 3000!
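The arithmetic behind this can be sketched in a few lines. This is a simplified illustration under stated assumptions, not what EMF Compare actually does: each side is represented as a hypothetical map from file name to a content digest, a file is considered "changed" if its digest differs on at least one side, and cross-references between fragments are ignored.

```python
from typing import Dict, Set

Side = Dict[str, str]  # file name -> content digest (hypothetical representation)


def files_to_load(left: Side, right: Side, origin: Side) -> Set[str]:
    """Return the files whose content differs on at least one of the three sides."""
    names = set(left) | set(right) | set(origin)
    changed = set()
    for name in names:
        digests = {left.get(name), right.get(name), origin.get(name)}
        if len(digests) > 1:  # not identical everywhere, so it may hold differences
            changed.add(name)
    return changed


# A 7-file model; two files changed locally, two remotely, one of them on both sides.
origin = {f"fragment{i}.uml": f"v0-{i}" for i in range(1, 8)}
left = {**origin, "fragment2.uml": "left-edit", "fragment5.uml": "left-edit"}
right = {**origin, "fragment5.uml": "right-edit", "fragment6.uml": "right-edit"}

changed = files_to_load(left, right, origin)
loaded = 3 * len(changed)   # each changed file is loaded once per side
total = 3 * len(origin)     # what a full load of the three sides would cost
saving = 1 - loaded / total

print(f"{loaded} of {total} files loaded, {saving:.0%} skipped")
```

With three changed files, 9 of the 21 files are loaded, and the number of files to load no longer depends on the total number of fragments.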

With these enhancements, we decided to run the very same tests as the ones we ran last year with EMF Compare 1.3. Please take a look at my old post for the structure of the three sample models and the test modus operandi. I will only paste the following here as a reminder :

The models' structure :

| | Small | Nominal | Large |
| --- | ---: | ---: | ---: |
| Fragments | 99 | 399 | 947 |
| Size on disk (MB) | 1.37 | 8.56 | 49.9 |
| Packages | 97 | 389 | 880 |
| Classes | 140 | 578 | 2,169 |
| Primitive Types | 581 | 5,370 | 17,152 |
| Data Types | 599 | 5,781 | 18,637 |
| State Machines | 55 | 209 | 1,311 |
| States | 202 | 765 | 10,156 |
| Dependencies | 235 | 2,522 | 8,681 |
| Transitions | 798 | 3,106 | 49,805 |
| Operations | 1,183 | 5,903 | 46,029 |
| Total element count | 3,890 | 24,623 | 154,820 |

And the time and memory required to compare each of the model sizes with EMF Compare 1.3 :

| | Small | Nominal | Large |
| --- | ---: | ---: | ---: |
| Time (seconds) | 6 | 22 | 125 |
| Maximum heap (MB) | 555 | 1,019 | 2,100 |

And without further ado, here is what these figures look like with EMF Compare 2.1 :

| | Small | Nominal | Large |
| --- | ---: | ---: | ---: |
| Time (seconds) | 5 | 13 | 48 |
| Maximum heap (MB) | 262 | 318 | 422 |
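For readers who want to put numbers on the difference, the following short script derives speed-ups and heap reductions from the two result tables. The figures are copied from the measurements for EMF Compare 1.3 and 2.1; the variable names are only for illustration.

```python
# Measurements for each model size: (time in seconds, maximum heap in MB).
compare_1_3 = {"Small": (6, 555), "Nominal": (22, 1019), "Large": (125, 2100)}
compare_2_1 = {"Small": (5, 262), "Nominal": (13, 318), "Large": (48, 422)}

speedup = {}
heap_saving = {}
for size, (old_time, old_heap) in compare_1_3.items():
    new_time, new_heap = compare_2_1[size]
    speedup[size] = old_time / new_time       # how many times faster 2.1 is
    heap_saving[size] = 1 - new_heap / old_heap  # fraction of heap no longer needed

# How much the heap grows from the small model to the large one, per version.
heap_growth_1_3 = compare_1_3["Large"][1] / compare_1_3["Small"][1]
heap_growth_2_1 = compare_2_1["Large"][1] / compare_2_1["Small"][1]

for size in compare_1_3:
    print(f"{size}: {speedup[size]:.1f}x faster, {heap_saving[size]:.0%} less heap")
print(f"Heap growth small -> large: {heap_growth_1_3:.1f}x (1.3) vs {heap_growth_2_1:.1f}x (2.1)")
```

On the large model this works out to roughly 2.6 times faster with about 80% less heap, and the heap grows about 1.6 times between the small and large models with 2.1, against about 3.8 times with 1.3.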

In short, EMF Compare now scales much more smoothly than it did with the 1.* stream, and it requires a lot less memory than it previously did. Furthermore, the one remaining time sink is the I/O and model parsing; the comparison itself is reduced to only a few seconds.

For those interested, you can find a much more detailed description of this work and the decomposition of the comparison process on the wiki.

Friday, September 14, 2012

EMF Compare 2 is available

On behalf of the team, I am very proud to announce that the 2.0 release of EMF Compare is now available and can be downloaded from its update site. This release is compatible with Eclipse 3.5, 3.6, 3.7, 3.8 and 4.2.

This new version is an overhaul of the project and as such contains too many enhancements to really list. You can refer to the project plan for an overview of the major themes addressed by this new release, or to my previous post for more information on why we decided to rewrite EMF Compare.

API compatibility between the 1.3 and 2.0 releases is not assured. The new APIs provided with 2.0 were developed in order to be simpler and more intuitive. A migration guide is not yet available, but we will provide all possible help in order to bridge the gap between the two streams' APIs and help users migrate to the new and improved Compare.

Do not hesitate to drop by on the forum for any question regarding this release!

Thursday, August 2, 2012

EMF Compare 2.0 on the launching pad!

This year has been a really important one for us at Obeo to think about our current products and how to improve them. Eclipse Juno was a big investment for us, with a lot of modeling projects in the release train : Acceleo, EMF Compare, EEF, ATL, Mylyn Intent...

EMF Compare is the one that got us thinking the most, and we ended up developing two versions in parallel during the development phase of Juno. First is the version we actually included in the release train, 1.3.1, which is now the maintenance stream.

This version includes a number of new developments and enhancements. Most notably, the detection and merging of differences on values ordering has been greatly improved, and the merging in general was one of our major endeavors this year, fixing thousands of corner cases. Please refer to the release highlights for the noteworthy changes of this version.

However, the 6 years of development spent on EMF Compare allowed us to identify a number of limitations in the architecture and overall design of the project, most of which cannot be worked around or re-designed without breaking the core of the comparison engine. We needed a complete overhaul of the project, and thus the second version we've been developing through the past year is the 2.0.0.

Version 2 has been started as part of the work sponsored in the context of the Modeling Platform Working Group. Its two main objectives are to :

- lift the architectural and technical limitations of EMF Compare so that we can reduce its memory footprint while improving its overall performance, and

- simplify the API we offer to our adopters so that launching comparisons or exploiting the difference model can be done more effectively.

Version 2.0.0 of EMF Compare will be out in the first half of August. It focuses on the comparison of models presenting IDs of some sort (XMI ID, technical ID, ...). The design and architecture have both been reworked with all the experience accumulated on the 1.* stream, greatly improving the user experience, performance, memory footprint and reusability of the tool. We've submitted a talk for EclipseCon Europe 2012 focusing on the intended improvements of this version. Do not hesitate to comment there if you're interested in it!
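As a rough illustration of why identifiers make comparison simpler, here is a minimal sketch of ID-based matching between two versions of a model. It is not the EMF Compare matcher: the function, the `Match` tuple and the IDs below are hypothetical, and each model is reduced to a plain map from ID to a dictionary of attributes.

```python
from typing import Dict, NamedTuple, Set


class Match(NamedTuple):
    added: Set[str]    # IDs only present in the new version
    removed: Set[str]  # IDs only present in the old version
    changed: Set[str]  # IDs present in both, with different attributes


def match_by_id(old: Dict[str, dict], new: Dict[str, dict]) -> Match:
    """Pair elements through their IDs instead of guessing from their content."""
    old_ids, new_ids = set(old), set(new)
    common = old_ids & new_ids
    return Match(
        added=new_ids - old_ids,
        removed=old_ids - new_ids,
        changed={element_id for element_id in common if old[element_id] != new[element_id]},
    )


old = {"_a1": {"name": "Customer"}, "_b2": {"name": "Order"}, "_c3": {"name": "Item"}}
new = {"_a1": {"name": "Client"}, "_b2": {"name": "Order"}, "_d4": {"name": "Invoice"}}

result = match_by_id(old, new)
print(result)
```

Because the element `_a1` keeps its ID, its renaming from "Customer" to "Client" is reported as a change rather than as a deletion followed by an addition.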

Version 2.1.0 is scheduled shortly after that, sometime during September. That new version will leverage the new architecture to provide the scalability improvements mandatory for very large model comparisons. In short, EMF Compare will be able to function with time and memory footprints dependent on the number of actual differences instead of being dependent on the input models' size.

Friday, January 20, 2012

Traceability test case : UML to Java generation

The next version of Acceleo will introduce a number of improvements to its existing features. One of the most important, the automatic builder, is being entirely rewritten in order to get rid of legacy code and improve the user experience. This also comes with a much better experience for users who need to build their Acceleo generators in standalone mode, through Maven or Tycho. More news on this is available on the bugzilla and in Stephane's latest post.

One of the least visible features, yet one I find among the most interesting aspects of the Acceleo code generator, is the traceability between the generated code, the model used as input of the generation, and the generator itself. Basically, you always know where that esoteric line of code came from : which part of the generation template generated it, and which model element triggered its generation.

This feature has seen a series of improvements as we used it intensively with the UML to Java generator. We are now confident that we can record and display accurate information even for some of the most complex use cases. This generator isn't the most complex generator we could write with Acceleo, but it is quite complete nonetheless. Here are some examples of what traceability can provide to architects developing their code generators :

Determine which model element triggered the generation of a line of code

On the left-hand side, a file that has been generated. On the right-hand side, an editor opened as a result of using the "open input" action : the model is opened and the exact element that was used to generate that part of the code is selected.

Find the part of a generator that created a given part of the code

On the left-hand side, the same generated file as above. On the right-hand side, the result of using the "open generator" action : the Acceleo generator which generated that selected part of the code is opened, with the exact source expression selected.
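The principle behind both actions can be sketched with a toy example. This is not how Acceleo stores its traceability information: the `TracingWriter` class, the element labels and the expression strings are all hypothetical, and the sketch simply assumes that every chunk of generated text is recorded together with the model element and the template expression that produced it.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Trace:
    start: int                # offset of the first generated character
    end: int                  # offset just past the last generated character
    model_element: str        # what "open input" would select
    template_expression: str  # what "open generator" would select


class TracingWriter:
    """Accumulates generated text and remembers where each region came from."""

    def __init__(self) -> None:
        self.parts: List[str] = []
        self.traces: List[Trace] = []
        self._length = 0

    def write(self, text: str, model_element: str, template_expression: str) -> None:
        start = self._length
        self.parts.append(text)
        self._length += len(text)
        self.traces.append(Trace(start, self._length, model_element, template_expression))

    def text(self) -> str:
        return "".join(self.parts)

    def trace_at(self, offset: int) -> Optional[Trace]:
        """Find the region of generated text that contains the given offset."""
        for trace in self.traces:
            if trace.start <= offset < trace.end:
                return trace
        return None


writer = TracingWriter()
writer.write("public class ", "Class 'Customer'", "static text of the class template")
writer.write("Customer", "Class 'Customer'", "aClass.name")
writer.write(" {\n}\n", "Class 'Customer'", "static text of the class template")

offset = writer.text().index("Customer")
hit = writer.trace_at(offset)
print(hit)
```

Selecting the word `Customer` in the generated file then leads back both to the model element and to the expression that printed its name.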

Real-time Synchronization

These are but a few of the features that can be derived from the synchronization between code, model, and generators. Some more examples include : previewing the result of a re-generation, incremental generation, round-trip (updating the input model according to manual changes in the output generated code)...

Most of these features are best understood by seeing them in a video. If you want to see some of them in action, more Flash videos of what traceability can do for you are available on the Obeo network (though a free registration is required).
